Joshua Bakita

PhD Candidate

Department of Computer Science

UNC Chapel Hill

Introduction

I do research to make GPUs fast and predictable in autonomous systems, like self-driving cars. See the below publications for my latest academic work, and see my CV for details on my industry contributions at Waymo, GM Research, Microsoft, and others.

CV, Research Statement, Google Scholar, LinkedIn, GitHub, Instagram, X, Blog

Languages

- C

- C#

- C++

- CUDA

- Java

- JavaScript

- Python

- Haskell

- Rust

- Go

- ARMv7 Asm

- Verilog

- Chisel

- Bash

- FORTRAN

- Erlang

- SQL

- HTML

- CSS

- ...

Teaching

COMP 211: Systems Fundamentals

Taught and developed a curriculum for COMP 211, the first systems class and third overall in the undergraduate CS sequence. No graduate student in the department had previously taught more than 45 students at a time, making this class three times larger than the previous record. The class focused on a robust coverage of the fundamentals of a computer system, from hardware constraints to operating system abstractions. Key learning objectives included a robust understanding of C; memory layouts, addressing, and pointers; signals, syscalls, and file I/O; software performance optimization; and basic shell navigation and tools. See the course website for general information, and the Final Exam Study Guide for a comprehensive list of all the topics covered.

Awards

Best Paper

For my work Hardware Compute Partitioning on NVIDIA GPUs for Composable Systems. One of only three papers to win awards at this top-three conference in real-time systems.

Outstanding Paper

For my work Hardware Compute Partitioning on NVIDIA GPUs. One of only three papers to win awards at this top-three conference in real-time systems.

Teaching Assistant of the Year

Chosen as the best teaching assistant by faculty and students in the Computer Science Department at UNC. Setting a new record, nearly 30% of my 150-person class nominated me for this award. On an anonymous feedback form, one student says "You are a wonderful person who cares about his students! Thank you so much for adding some sunlight into the spring of my senior year!" And during the awards ceremony, the instructor Dr. Diane Pozefsky opened her remarks by saying that "Josh saved the course for the students." Another student comments, "He was super understanding, helpful, knowledgable, and very kind. He always went out of his way to help students and his calm and helpful responses are always reliable and good. He shows a lot of great enthusiasm for the material and is a great person."

More Information

Publications

Hardware Compute Partitioning on NVIDIA GPUs for Composable Systems

Proceedings of the 37th Euromicro Conference on Real-Time Systems (ECRTS), pp. 21:1-21:25, July 2025. Winner, best paper award.

Abstract: "As GPU-using tasks become more common in embedded, safety-critical systems, efficiency demands necessitate sharing a single GPU among multiple tasks. Unfortunately, existing ways to schedule multiple tasks onto a GPU often either result in a loss of ability to meet deadlines, or a loss of efficiency. In this work, we develop a system-level spatial compute partitioning mechanism for NVIDIA GPUs and demonstrate that it can be used to execute tasks efficiently without compromising timing predictability. Our tool, called nvtaskset, supports composable systems by not requiring task, driver, or hardware modifications. In our evaluation, we demonstrate sub-1-μs overheads, stronger partition enforcement, and finer-granularity partitioning when using our mechanism instead of NVIDIA's Multi-Process Service (MPS) or Multi-instance GPU (MiG) features."

PDF Slides Artifact

The Advantage of the GPU as a Real-Time AI Accelerator

Real-Time Systems (Journal), June 2025.

Abstract: "Integrating AI into real-world systems such as autonomous vehicles or interactive assistants requires the use of compute accelerators. Traditional processors such as x86 or ARM CPUs are insufficient. Unfortunately, real-world systems have responsiveness requirements, and research is underdeveloped on guaranteeing such responsiveness for accelerator-using systems. One constraint has been uncertainty about what sort of accelerator is best for such systems. In this paper, we argue that researchers should focus on the GPU as the accelerator of choice for embedded real-time AI workloads. We argue that GPUs are already being widely adopted, provide leading compute density, and are architecturally well-suited for real-world, real-time systems."

PDF

Work in Progress: Increasing Schedulability via on-GPU Scheduling

Proceedings of the 31st Real-Time and Embedded Technology and Applications Symposium (RTAS), pp. 422-425, May 2025.

Abstract: "GPUs are increasingly needed to run a variety of tasks in embedded systems, from object recognition to conversational chat. Some of these tasks are safety-critical, real-time tasks, where completing each by its deadline is essential for system safety. To meet the practical constraints of real-world systems, these tasks must also be run efficiently. Unfortunately, current techniques to schedule GPU-using tasks onto a single GPU while respecting deadlines impart high overheads, leading to inefficiency and substantial capacity loss during formal analysis. We address this problem by moving GPU scheduling from the CPU to the GPU. Our approach limits overheads, increasing the proportion of CPU tasks which can meet their deadlines by as much as 12.1% while increasing available GPU capacity."

PDF Slides Poster

Concurrent FFT Execution on GPUs in Real-Time

Proceedings of the 33rd Euromicro International Conference on Parallel, Distributed, and Network-Based Processing (PDP), pp. 154-161, Mar 2025.

Abstract: "Fourier transforms are vital for a broad range of signal-processing applications. Accelerating FFTs with GPUs offers an orders-of-magnitude improvement vs. CPU-only FFT computation. However, two problems arise when executing FFT tasks with other GPU work. First, concurrent GPU use introduces unpredictability in the form of lengthy response times. Second, it is unclear how to best parameterize and schedule FFT tasks to meet the throughput and timeliness constraints of real-time signal processing. This work investigates how FFT and other GPU-using tasks can concurrently access a GPU while maintaining bounded response-time guarantees without sacrificing throughput. In our experiments, the techniques proposed by this work result in an up to 17% improvement in worst-case FFT execution times."

PDF

Demystifying NVIDIA GPU Internals to Enable Reliable GPU Management

Proceedings of the 30th Real-Time and Embedded Technology and Applications Symposium (RTAS), pp. 294-305, May 2024.

Abstract: "As GPU-dependent workloads from work on artificial intelligence and machine learning increasingly come to embedded, safety-critical systems---such as self-driving cars---real-time predictability for GPU-using tasks becomes essential. This paper identifies flaws in three different real-time GPU management approaches that are largely the result of incomplete information about GPU internals. Details concerning this missing information are then elucidated via experiments. Based on this information, key rules of GPU scheduling are identified and shown necessary for safe GPU management."

PDF Slides Artifact

Hardware Compute Partitioning on NVIDIA GPUs

Proceedings of the 29th Real-Time and Embedded Technology and Applications Symposium (RTAS), pp. 54-66, May 2023. Winner, outstanding paper award.

Abstract: "Embedded and autonomous systems are increasingly integrating AI/ML features, often enabled by a hardware accelerator such as a GPU. As these workloads become increasingly demanding, but size, weight, power, and cost constraints remain unyielding, ways to increase GPU capacity are an urgent need. In this work, we provide a means by which to spatially partition the computing units of NVIDIA GPUs transparently, allowing oft-idled capacity to be reclaimed via safe and efficient GPU sharing. Our approach works on any NVIDIA GPU since 2013, and can be applied via our easy-to-use, user-space library titled libsmctrl. We back the design of our system with deep investigations into the hardware scheduling pipeline of NVIDIA GPUs. We provide guidelines for the use of our system, and demonstrate it via an object detection case study using YOLOv2."

PDF Slides Recorded Presentation Artifact libsmctrl Repository

Enabling GPU Memory Oversubscription via Transparent Paging to an NVMe SSD

Proceedings of the 43rd Real-Time Systems Symposium (RTSS), pp. 370-382, Dec 2022.

Abstract: "Safety-critical embedded systems are experiencing increasing computational and memory demands as edge-computing and autonomous systems gain adoption. Main memory (DRAM) is often scarce, and existing mechanisms to support DRAM oversubscription, such as demand paging or compile-time transformations, either imply serious CPU capacity loss, or put unacceptable constraints on program structure. This work proposes an alternative: paging GPU rather than CPU memory buffers directly to permanent storage to enable efficient and predictable memory oversubscription. Most embedded systems share a single DRAM for both CPU and GPU, so this approach does not only benefit GPU tasks. This paper focuses on why GPU paging is useful and how it can be efficiently implemented. Specifically, a GPU paging implementation is proposed as an extension to NVIDIA's embedded Linux GPU drivers. In experiments reported herein, this implementation was seen to be three times faster end-to-end than demand paging, with 80% lower overheads. It also achieved speeds above the fastest preexisting Linux userspace I/O APIs with low DRAM and bus interference to CPU tasks---at most a 17% slowdown."

PDF Slides Artifact

Minimizing DAG Utilization by Exploiting SMT

Proceedings of the 28th Real-Time and Embedded Technology and Applications Symposium (RTAS), pp. 267-280, May 2022.

Abstract: "Parallel workloads are commonly modeled as directed acyclic graphs (DAGs). While DAG scheduling is an important tool, it is plagued by capacity loss; it is not uncommon to see half of a platform go unused. Here this loss is attacked from a new direction: reducing per-DAG utilization prior to assigning computing cores to a DAG. Specifically, simultaneous multithreading (SMT) is used to schedule individual nodes of a DAG task in parallel on the same physical computing core. An optimization program is given that applies SMT to a DAG in a way that minimizes total utilization without compromising correctness. Results for both individual DAGs and systems of DAGs are evaluated using both a large-scale study of synthetic DAGs and a case study. Optimal use of the program can reduce DAG utilization and required core counts by over 40% in the best cases and by 25% in nearly half of cases. Runtime requirements for the optimization program are considered, and a tunable parameter is provided to make tradeoffs between runtime and optimality, allowing even DAGs with 500 nodes to benefit."

PDF Recorded Presentation

TimeWall: Enabling Time Partitioning for Real-Time Multicore+Accelerator Platforms

Proceedings of the 42nd Real-Time Systems Symposium (RTSS), pp. 455-468, Dec 2021.

Abstract: "Across a range of safety-critical domains, an evolution is underway to endow embedded systems with "thinking" capabilities by using artificial-intelligence (AI) techniques. This evolution is being fueled by the availability of high-performance embedded hardware, typically multicore machines augmented with accelerators. Unfortunately, existing software certification processes rely on time partitioning to isolate system components, and this sense of isolation can be broken by accelerator usage. To address this issue, this paper presents CONCE^RT, a time-partitioning framework for multicore+accelerator platforms. When applied alongside existing methods for alleviating spatial interference, CONCE^RT can help enable component-wise certification on multicore+accelerator platforms. The challenges in realizing a CONCE^RT implementation are discussed in detail in this paper. Additionally, the temporal isolation CONCE^RT affords is examined experimentally, including via a case study of a computer-vision perception application, on a real platform."

PDF Recorded Presentation

Simultaneous Multithreading in Mixed-Criticality Real-Time Systems

Proceedings of the 27th Real-Time and Embedded Technology and Applications Symposium (RTAS), pp. 278-291, May 2021.

Abstract: "Simultaneous multithreading (SMT) enables enhanced computing capacity by allowing multiple tasks to execute concurrently on the same computing core. Despite its benefits, its use has been largely eschewed in work on real-time systems due to concerns that tasks running on the same core may adversely interfere with each other. In this paper, the safety of using SMT in a mixed-criticality multicore context is considered in detail. To this end, a prior open-source framework called MC² (mixed criticality on multicore), which provides features for mitigating cache and memory interference, was re-implemented to support SMT on an SMT-capable multicore platform. The creation of this new, configurable MC² variant entailed producing the first operating-system implementations of several recently proposed real-time SMT schedulers and tying them together within a mixed-criticality context. These schedulers introduce new spatial-isolation challenges, which required introducing isolation at both the L2 and L3 cache levels. The efficacy of the resulting MC² variant is demonstrated via three experimental efforts. The first involved obtaining execution data using a wide range of benchmark suites, including TACLeBench, DIS, SD-VBS, and synthetic microbenchmarks. The second involved conducting a large-scale overhead-aware schedulability study, parameterized by the collected benchmark data, to elucidate schedulability tradeoffs. The third involved experiments involving case-study task systems. In the schedulability study, the use of SMT proved capable of increasing platform capacity by an average factor of 1.32. In the case-study experiments, deadline misses of highly critical tasks were never observed."

PDF Artifact Slides Recorded Presentation

Statically Optimal Dynamic Soft Real-Time Semi-Partitioned Scheduling

Abstract: "Semi-partitioned scheduling is an approach to multiprocessor real-time scheduling where most tasks are fixed to processors, while a small subset of tasks is allowed to migrate. This approach offers reduced overhead compared to global scheduling, and can reduce processor capacity loss compared to partitioned scheduling. Prior work has resulted in a number of semi-partitioned scheduling algorithms, but their correctness typically hinges on a complex intertwining of offline task assignment and online execution. This brittleness has resulted in few proposed semi-partitioned scheduling algorithms that support dynamic task systems, where tasks may join or leave the system at runtime, and few that are optimal in any sense. This paper introduces EDF-sc, the first semi-partitioned scheduling algorithm that is optimal for scheduling (static) soft real-time (SRT) sporadic task systems and allows tasks to dynamically join and leave. The SRT notion of optimality provided by EDF-sc requires deadline tardiness to be bounded for any task system that does not cause over-utilization. In the event that all tasks can be assigned as fixed, EDF-sc behaves exactly as partitioned EDF. Heuristics are provided that give EDF-sc the novel ability to stabilize the workload to approach the partitioned case as tasks join and leave the system."

Simultaneous Multithreading Applied to Real Time

Abstract: "Existing models used in real-time scheduling are inadequate to take advantage of simultaneous multithreading (SMT), which has been shown to improve performance in many areas of computing, but has seen little application to real-time systems. The SMART task model, which allows for combining SMT and real time by accounting for the variable task execution costs caused by SMT, is introduced, along with methods and conditions for scheduling SMT tasks under global earliest-deadline-first scheduling. The benefits of using SMT are demonstrated through a large-scale schedulability study in which we show that task systems with utilizations up to 30% larger than what would be schedulable without SMT can be correctly scheduled."

Re-thinking CNN Frameworks for Time-Sensitive Autonomous-Driving Applications: Addressing an Industrial Challenge

Abstract: "Vision-based perception systems are crucial for profitable autonomous-driving vehicle products. High accuracy in such perception systems is being enabled by rapidly evolving convolution neural networks (CNNs). To achieve a better understanding of its surrounding environment, a vehicle must be provided with full coverage via multiple cameras. However, when processing multiple video streams, existing CNN frameworks often fail to provide enough inference performance, particularly on embedded hardware constrained by size, weight, and power limits. This paper presents the results of an industrial case study that was conducted to re-think the design of CNN software to better utilize available hardware resources. In this study, techniques such as parallelism, pipelining, and the merging of per-camera images into a single composite image were considered in the context of a Drive PX2 embedded hardware platform. The study identifies a combination of techniques that can be applied to increase throughput (number of simultaneous camera streams) without significantly increasing per-frame latency (camera to CNN output) or reducing per-stream accuracy."

Avoiding Pitfalls when Using NVIDIA GPUs for Real-Time Tasks in Autonomous Systems

Abstract: "NVIDIA's CUDA API has enabled GPUs to be used as computing accelerators across a wide range of applications. This has resulted in performance gains in many application domains, but the underlying GPU hardware and software are subject to many non-obvious pitfalls. This is particularly problematic for safety-critical systems, where worst-case behaviors must be taken into account. While such behaviors were not a key concern for earlier CUDA users, the usage of GPUs in autonomous vehicles has taken CUDA programs out of the sole domain of computer-vision and machine-learning experts and into safety-critical processing pipelines. Certification is necessary in this new domain, which is problematic because GPU software may have been developed without any regard for worst-case behaviors. Pitfalls when using CUDA in real-time autonomous systems can result from the lack of specifics in official documentation, and developers of GPU software not being aware of the implications of their design choices with regards to real-time requirements. This paper focuses on the particular challenges facing the real-time community when utilizing CUDA-enabled GPUs for autonomous applications, and best practices for applying real-time safety-critical principles."

Scaling Up: The Validation of Empirically Derived Scheduling Rules on NVIDIA GPUs

Abstract: "Embedded systems augmented with graphics processing units (GPUs) are seeing increased use in safety-critical real-time systems such as autonomous vehicles. The current black-box and proprietary nature of these GPUs has made it difficult to determine their behavior in worst-case scenarios, threatening the safety of autonomous systems. In this work, we introduce a new automated validation framework to analyze GPU execution traces and determine if behavioral assumptions inferred from black-box experiments consistently match behavior of real-world devices. We find that the behaviors observed in prior work are consistent on a small scale, but the rules do not stretch to significantly older GPUs and struggle with complex GPU workloads."

PDF Slides

Personal Project Archive

SafeShare

Built a platform to share vehicles using the security of the Ethereum blockchain as the trust basis instead of a physical entity. Also uses the APIs from Smartcar to allow unattended unlocking and ignition for shared vehicles to eliminate most overhead. Prototype implementation won awards from Coinbase, Smartcar, Contrary Capital, and HackDuke at HackDuke 2017.

Landing Page

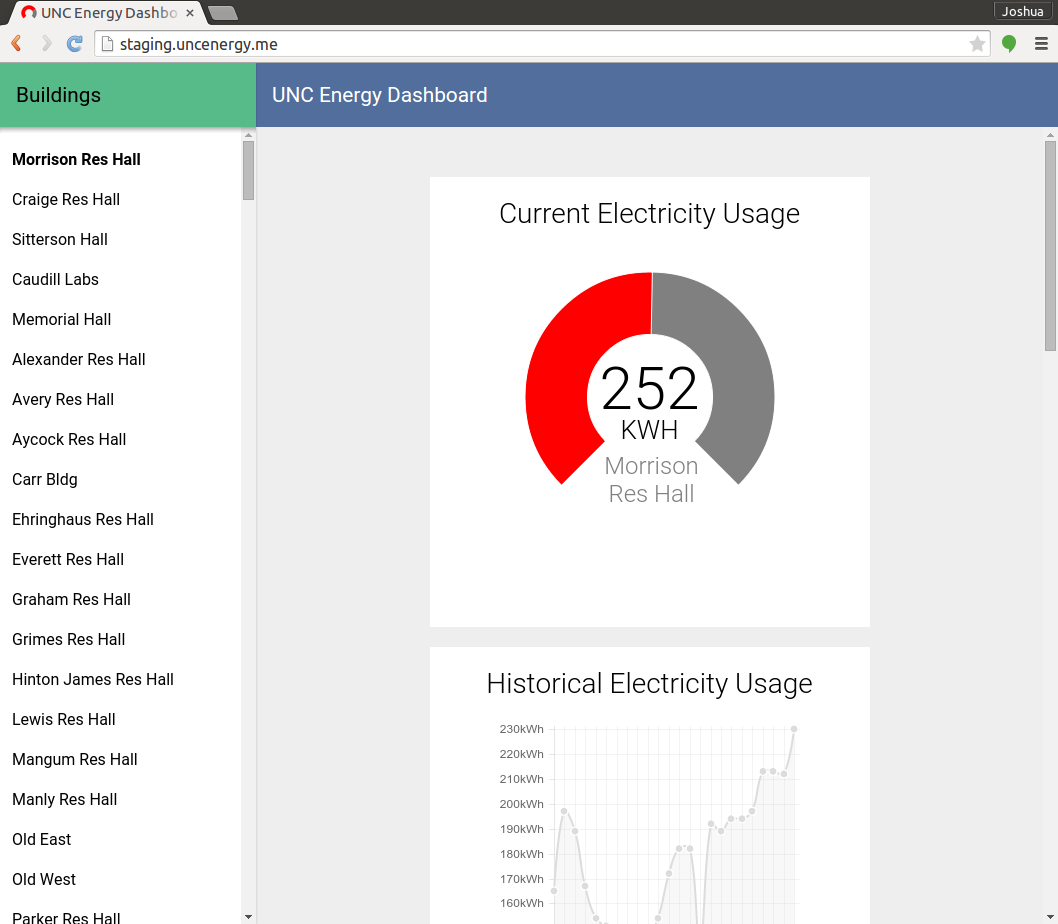

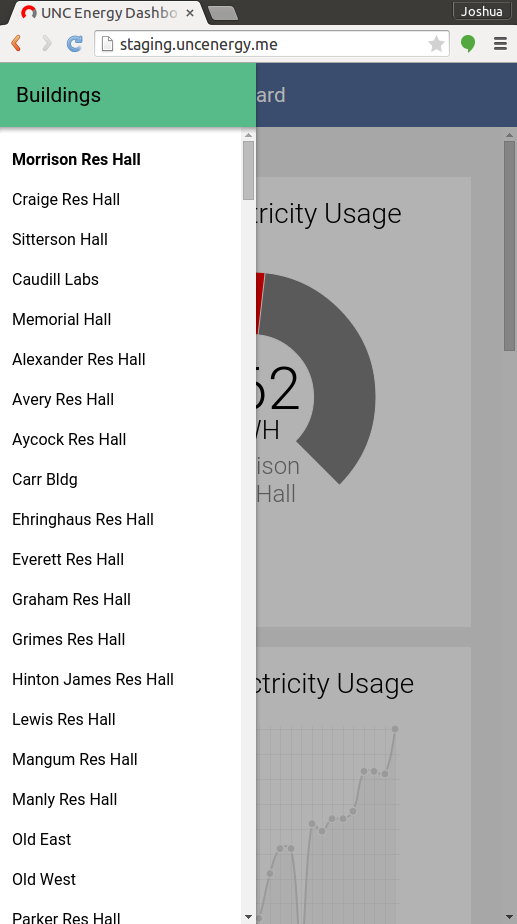

UNC Energy Dashboard

Built a website to display energy usage data for every building at the University of North Carolina at Chapel Hill. Features include:

- Displays electricity, steam, and chilled water usage data in realtime

- Dynamic updates using AJAX with a REST API

- Live and historical data

- Dynamic web page layout

- Works on mobile or desktop

Prototype implementation won an award from Microsoft at HackNC 2014

GitHub Repo Homepage (Note: changes to HTML shadow DOM have broken current deployment)

0 A.D. Empires Ascendant Multiplayer Lobby

Managed a small team to build an online realtime multiplayer matchmaking lobby and ranking system. Features include:

- Real time chat with IRC-like command system

- Detailed game listing and join system

- User list with profiles

- SHA256 PBKDF2 secure password hashing with salting

- Leaderboard using the Elo rating algorithm

- UPnP port negotiation system

- Uses XMPP protocol with custom-built extensions and encryption
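Two of the features above, salted PBKDF2 password hashing with SHA-256 and Elo-based ranking, can be sketched in a few lines of Python. This is a minimal illustration and not the lobby's actual code: the function names, the 16-byte salt, the iteration count, and the K-factor of 32 are all assumptions chosen for the example.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Assumed value for illustration; the lobby's real setting is not stated above.
DEFAULT_ITERATIONS = 100_000


def hash_password(password: str, salt: Optional[bytes] = None,
                  iterations: int = DEFAULT_ITERATIONS) -> Tuple[bytes, bytes]:
    """Return (salt, digest) using PBKDF2-HMAC-SHA256 with a per-user salt."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for each new password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                 salt, iterations)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes,
                    iterations: int = DEFAULT_ITERATIONS) -> bool:
    """Recompute the digest and compare it in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected)


def elo_update(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> Tuple[float, float]:
    """Return updated (rating_a, rating_b) after one game.

    score_a is 1.0 if player A won, 0.5 for a draw, and 0.0 for a loss.
    """
    expected_a = 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))
    delta = k * (score_a - expected_a)
    return rating_a + delta, rating_b - delta
```

With these assumed parameters, two equally rated players exchange 16 points after a decisive game, and a stored `(salt, digest)` pair is enough to check a later login attempt without keeping the password itself.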

Share Sphero App

Built a Node.js app using WebSockets for realtime multi-user control of the Sphero robot

- Low-latency cross-platform communication using WebSockets

- Shared JavaScript codebase across client and server

- Built as part of a collaboration between Microsoft and Orbotix

- Uses Google's Polymer framework and the PeerJS library

Pebble Accelerometer Log

Built an application stack to gather long periods of continuous accelerometer readings

- Low-power, interrupt-based, realtime collection system on Pebble

- Custom designed lossless compression for data storage and transmission

- Developed an Android App in Java and a Pebble App in C

- Worked in collaboration with UNC's School of Geriatric Medicine

- Used by Alzheimer's researchers at the University of Twente